Multicast Reverse Path Forwarding

One of the key differences between unicast and multicast is that for unicast routing we only care about where the destination is located and how to get there. For multicast routing we care about where the source is located. PIM (Protocol Independent Multicast) uses the unicast routing table to check what interface will be used to reach the source.

PIM only accepts multicast packets on the interface that the router itself uses to reach the source. If multicast packets arrive on any other interface, the router drops them. This is called an RPF failure.

Introduction:

In normal (unicast) routing, packet forwarding decisions are typically based on the destination address of the packet arriving at a router. The unicast routing table is organized by destination subnet and is set up mainly to forward the packet toward the destination. In IP multicast routing, the router forwards the packet away from the source to make progress along the distribution tree and prevent routing loops. The router's multicast forwarding state is therefore organized around the reverse path, from the receiver back to the root of the distribution tree. This process is known as reverse-path forwarding (RPF).

In short, an incoming multicast packet is not accepted or forwarded unless it arrives on the interface that is the outgoing interface for the unicast route to the packet's source.
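To make the idea of forwarding state "organized around the reverse path" more concrete, here is a minimal Python sketch of a single (S,G) entry. The class name `MrouteEntry` and its fields are hypothetical and chosen only for illustration; the values mirror the (1.1.1.1, 239.1.1.1) entry that appears in R3's `sh ip mroute` output later in this article. It is a simplified model, not how IOS stores its state.

```python
from dataclasses import dataclass, field


@dataclass
class MrouteEntry:
    """Hypothetical, simplified model of one (S,G) multicast route entry."""
    source: str
    group: str
    incoming_interface: str  # the RPF interface toward the source
    outgoing: dict = field(default_factory=dict)  # interface -> "Forward" or "Prune"

    def forward(self, arrival_interface):
        """Return the interfaces a packet is replicated to, or [] if it is dropped."""
        if arrival_interface != self.incoming_interface:
            return []  # arrived on the wrong interface: RPF failure
        return [ifname for ifname, state in self.outgoing.items() if state == "Forward"]


# Values taken from R3's (1.1.1.1, 239.1.1.1) entry shown later in the article.
entry = MrouteEntry(
    source="1.1.1.1",
    group="239.1.1.1",
    incoming_interface="FastEthernet0/0",
    outgoing={"Loopback0": "Forward", "Serial0/0": "Prune"},
)

print(entry.forward("FastEthernet0/0"))
print(entry.forward("Serial0/0"))
```

The entry is keyed by source and group rather than by destination subnet, and the first thing it checks is where the packet came from, which is the opposite emphasis from a unicast lookup.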

Configuration Example:

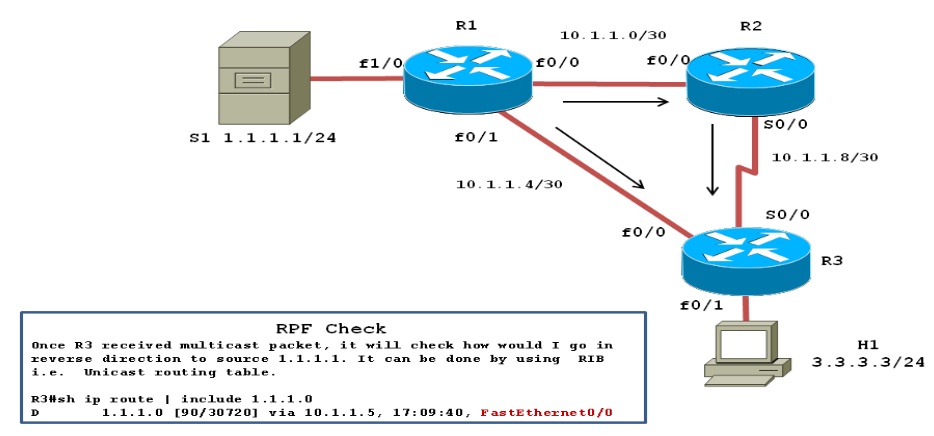

In this example, multicast server S1 sends a multicast packet, and R1 floods it to R2 and R3. R2 receives its copy and floods it as well. As a result, R3 receives the same packet from two routers:

a) On its interface fa0/0 from R1.

b) On its interface s0/0 from R2.

Topology Diagram:

Without the RPF check, R3 would forward the packet it got from R1 to R2, and vice versa, and the packet would start to loop. By the same logic, R1 and R2 would keep repeating the process. This duplication creates multicast routing loops and generates multicast storms that waste bandwidth and router resources.
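The effect of the loop can be illustrated with a small simulation. This is a rough sketch under simplifying assumptions: the three routers form a triangle, every router floods a packet out of all links except the one it arrived on, and only TTL expiry stops a looping packet. Pruning and other PIM dense-mode mechanisms are deliberately ignored, and the names `LINKS`, `RPF_NEIGHBOR`, and `flood` are hypothetical.

```python
from collections import deque

# Router-to-router links of the triangle topology (S1 sits behind R1).
LINKS = {"R1": ["R2", "R3"], "R2": ["R1", "R3"], "R3": ["R1", "R2"]}

# Neighbor each router uses to reach the source S1 (assumed from the topology).
RPF_NEIGHBOR = {"R1": "S1", "R2": "R1", "R3": "R1"}


def flood(ttl, use_rpf):
    """Count router-to-router transmissions caused by one packet sent by S1."""
    queue = deque([("S1", "R1", ttl)])  # (previous hop, current router, remaining TTL)
    transmissions = 0
    while queue:
        prev, router, ttl_left = queue.popleft()
        if use_rpf and RPF_NEIGHBOR[router] != prev:
            continue  # RPF failure: packet did not come from the RPF neighbor
        if ttl_left <= 1:
            continue  # TTL expired, packet cannot be forwarded further
        for neighbor in LINKS[router]:
            if neighbor != prev:  # never send back out the arrival link
                transmissions += 1
                queue.append((router, neighbor, ttl_left - 1))
    return transmissions


print("with RPF:   ", flood(ttl=64, use_rpf=True))
print("without RPF:", flood(ttl=64, use_rpf=False))
```

In this model the count with the RPF check stays constant no matter how large the TTL is, while the count without it grows with the TTL, because each copy keeps circling the triangle until its TTL runs out.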

Before I dive into multicast configuration, let me share with you the initial configuration of our network. All relevant configurations are below.

| R1 | R2 | R3 |
|---|---|---|
| hostname R1<br>ip cef<br>!<br>ip multicast-routing<br>!<br>interface FastEthernet1/0<br>ip address 1.1.1.1 255.255.255.0<br>ip pim dense-mode<br>!<br>interface FastEthernet0/0<br>ip address 10.1.1.1 255.255.255.252<br>ip pim dense-mode<br>speed 100<br>full-duplex<br>!<br>interface FastEthernet0/1<br>ip address 10.1.1.5 255.255.255.252<br>ip pim dense-mode<br>speed 100<br>full-duplex<br>!<br>router eigrp 1<br>network 1.1.1.1 0.0.0.0<br>network 10.1.1.0 0.0.0.255<br>no auto-summary | hostname R2<br>!<br>ip multicast-routing<br>!<br>interface FastEthernet0/0<br>ip address 10.1.1.2 255.255.255.252<br>ip pim dense-mode<br>speed 100<br>full-duplex<br>!<br>interface Serial0/0<br>ip address 10.1.1.9 255.255.255.252<br>ip pim dense-mode<br>clock rate 2000000<br>!<br>router eigrp 1<br>network 10.1.1.0 0.0.0.255<br>no auto-summary<br>! | hostname R3<br>!<br>ip cef<br>!<br>ip multicast-routing<br>!<br>interface FastEthernet0/0<br>ip address 10.1.1.6 255.255.255.252<br>ip pim dense-mode<br>no ip route-cache<br>no ip mroute-cache<br>speed 100<br>full-duplex<br>!<br>interface FastEthernet0/1<br>ip address 3.3.3.3 255.255.255.0<br>ip pim dense-mode<br>ip igmp join-group 239.1.1.1<br>!<br>interface Serial0/0<br>ip address 10.1.1.10 255.255.255.252<br>ip pim dense-mode<br>no ip route-cache<br>no ip mroute-cache<br>clock rate 2000000<br>!<br>router eigrp 1<br>network 3.3.3.3 0.0.0.0<br>network 10.1.1.0 0.0.0.255<br>no auto-summary<br>! |

When R3 performs the RPF check the following things will happen:

1) R3 examines the source address of each incoming packet, which is 1.1.1.1.

2) R3 determines the reverse-path interface based on the route it uses to forward packets to 1.1.1.1.

In our case, R3's route to 1.1.1.0/24 is matched, and it lists fa0/0 as the outgoing interface, making fa0/0 R3's RPF interface for IP address 1.1.1.1.

R3#sh ip route | beg Gate

Gateway of last resort is not set

1.0.0.0/24 is subnetted, 1 subnets

D 1.1.1.0 [90/156160] via 10.1.1.5, 02:01:51, FastEthernet0/0

3.0.0.0/24 is subnetted, 1 subnets

C 3.3.3.0 is directly connected, Loopback0

10.0.0.0/30 is subnetted, 3 subnets

C 10.1.1.8 is directly connected, Serial0/0

D 10.1.1.0 [90/30720] via 10.1.1.5, 04:24:40, FastEthernet0/0

C 10.1.1.4 is directly connected, FastEthernet0/0

R3#sh ip rpf 1.1.1.1

RPF information for ? (1.1.1.1)

RPF interface: FastEthernet0/0

RPF neighbor: ? (10.1.1.5)

RPF route/mask: 1.1.1.0/24

RPF type: unicast (eigrp 1)

RPF recursion count: 0

Doing distance-preferred lookups across tables

R3#sh ip mroute | beg Interfac

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.1.1.1), 00:38:46/stopped, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Loopback0, Forward/Dense, 00:38:46/00:00:00

FastEthernet0/0, Forward/Dense, 00:38:46/00:00:00

Serial0/0, Forward/Dense, 00:38:46/00:00:00

(1.1.1.1, 239.1.1.1), 00:00:26/00:02:37, flags: LT

Incoming interface: FastEthernet0/0, RPF nbr 10.1.1.5

Outgoing interface list:

Loopback0, Forward/Dense, 00:00:26/00:00:00

Serial0/0, Prune/Dense, 00:00:26/00:02:34, A

3) R3 compares the reverse-path interface (fa0/0) with the interface on which the multicast packet arrived. If they match, it accepts the packet and forwards it; otherwise, it drops the packet. In this case, R3 floods the packets received on fa0/0 from R1 but ignores the packets received on s0/0 from R2.
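The three steps above can be sketched in Python as a longest-prefix match on the source address followed by an interface comparison. The route list is copied from R3's `sh ip route` output above; the function names `rpf_interface` and `rpf_check` are hypothetical, and the sketch ignores details such as static mroutes, administrative distance, and equal-cost paths.

```python
import ipaddress

# R3's unicast routes from the "sh ip route" output above: (prefix, outgoing interface).
R3_ROUTES = [
    ("1.1.1.0/24", "FastEthernet0/0"),
    ("3.3.3.0/24", "Loopback0"),
    ("10.1.1.8/30", "Serial0/0"),
    ("10.1.1.0/30", "FastEthernet0/0"),
    ("10.1.1.4/30", "FastEthernet0/0"),
]


def rpf_interface(source, routes):
    """Steps 1 and 2: longest-prefix match on the source; return the interface or None."""
    addr = ipaddress.ip_address(source)
    best = None  # (network, interface) of the most specific matching route so far
    for prefix, interface in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, interface)
    return best[1] if best else None


def rpf_check(source, arrival_interface, routes):
    """Step 3: accept only if the packet arrived on the RPF interface for its source."""
    return rpf_interface(source, routes) == arrival_interface


for ifname in ("FastEthernet0/0", "Serial0/0"):
    verdict = "accept" if rpf_check("1.1.1.1", ifname, R3_ROUTES) else "drop (RPF failure)"
    print(f"packet from 1.1.1.1 on {ifname}: {verdict}")
```

A source with no matching route has no RPF interface at all, so in this sketch such a packet fails the check on every interface.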

Verification:

1) To verify, we send ICMP echoes to group 239.1.1.1 from R1 with source 1.1.1.1. It is safer to collect debug output in the logging buffer than on the console, so we debug multicast packets and collect the output in the logging buffer as shown below:

R3#conf t

Enter configuration commands, one per line. End with CNTL/Z.

R3(config)#logging console informational

R3(config)#logging buffer 7

R3(config)#logging buffer 64000

R3(config)#no ip cef

R3(config)#end

*Mar 1 04:44:41.670: %SYS-5-CONFIG_I: Configured from console by console

R3#debug ip mpacket

IP multicast packets debugging is on

R1#ping 239.1.1.1 source 1.1.1.1

Type escape sequence to abort.

Sending 1, 100-byte ICMP Echos to 239.1.1.1, timeout is 2 seconds:

Packet sent with a source address of 1.1.1.1

Reply to request 0 from 10.1.1.6, 24 ms

Reply to request 0 from 10.1.1.6, 128 ms

R3#sh logging | beg Log

Log Buffer (64000 bytes):

IP(0): s=10.1.1.5 (FastEthernet0/0) d=239.1.1.1 (Serial0/0) id=19, ttl=254, prot=1, len=100(100), mforward

IP(0): s=10.1.1.1 (Serial0/0) d=239.1.1.1 id=19, ttl=253, prot=1, len=104(100), not RPF interface

IP(0): s=10.1.1.5 (FastEthernet0/0) d=239.1.1.1 (Serial0/0) id=20, ttl=254, prot=1, len=100(100), mforward

IP(0): s=10.1.1.1 (Serial0/0) d=239.1.1.1 id=20, ttl=253, prot=1, len=104(100), not RPF interface

IP(0): s=1.1.1.1 (FastEthernet0/0) d=239.1.1.1 (Serial0/0) id=20, ttl=253, prot=1, len=100(100), mforward

IP(0): s=1.1.1.1 (Serial0/0) d=239.1.1.1 id=20, ttl=252, prot=1, len=104(100), not RPF interface

From the above logs we can see that R3 forwarded the packets received on fa0/0 from R1 but ignored the packets received on s0/0 from R2.
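When the buffer holds many lines, a short script can tally the outcome per incoming interface. The following Python sketch parses the six log lines shown above; the regular expression is an assumption based only on the format of those lines and may need adjusting for other IOS versions, and the names `LINE_RE` and `summarize` are hypothetical.

```python
import re
from collections import Counter

# The six "debug ip mpacket" lines from R3's log buffer shown above.
LOG_LINES = [
    "IP(0): s=10.1.1.5 (FastEthernet0/0) d=239.1.1.1 (Serial0/0) id=19, ttl=254, prot=1, len=100(100), mforward",
    "IP(0): s=10.1.1.1 (Serial0/0) d=239.1.1.1 id=19, ttl=253, prot=1, len=104(100), not RPF interface",
    "IP(0): s=10.1.1.5 (FastEthernet0/0) d=239.1.1.1 (Serial0/0) id=20, ttl=254, prot=1, len=100(100), mforward",
    "IP(0): s=10.1.1.1 (Serial0/0) d=239.1.1.1 id=20, ttl=253, prot=1, len=104(100), not RPF interface",
    "IP(0): s=1.1.1.1 (FastEthernet0/0) d=239.1.1.1 (Serial0/0) id=20, ttl=253, prot=1, len=100(100), mforward",
    "IP(0): s=1.1.1.1 (Serial0/0) d=239.1.1.1 id=20, ttl=252, prot=1, len=104(100), not RPF interface",
]

# Source address, incoming interface, group, and the final comma-separated field (the result).
LINE_RE = re.compile(
    r"s=(?P<src>\S+) \((?P<in_if>[^)]+)\) d=(?P<group>\S+).*, (?P<result>[^,]+)$"
)


def summarize(lines):
    """Count (incoming interface, result) pairs across the given log lines."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        if match:
            counts[(match["in_if"], match["result"].strip())] += 1
    return counts


for (in_if, result), count in sorted(summarize(LOG_LINES).items()):
    print(f"{in_if:16} {result:18} {count}")
```

For the lines above, every packet that arrived on FastEthernet0/0 is marked `mforward` and every packet that arrived on Serial0/0 is marked `not RPF interface`.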

2) Let’s look at the same behavior using mtrace from R1 while capturing packets with Wireshark on R3’s interfaces Fa0/0 and S0/0.

R1#mtrace 1.1.1.1 3.3.3.3 239.1.1.1

Type escape sequence to abort.

Mtrace from 1.1.1.1 to 3.3.3.3 via group 239.1.1.1

From source (?) to destination (?)

Querying full reverse path...

0 3.3.3.3

-1 10.1.1.6 PIM [1.1.1.0/24]

-2 10.1.1.5 PIM [1.1.1.0/24]

-3 1.1.1.1

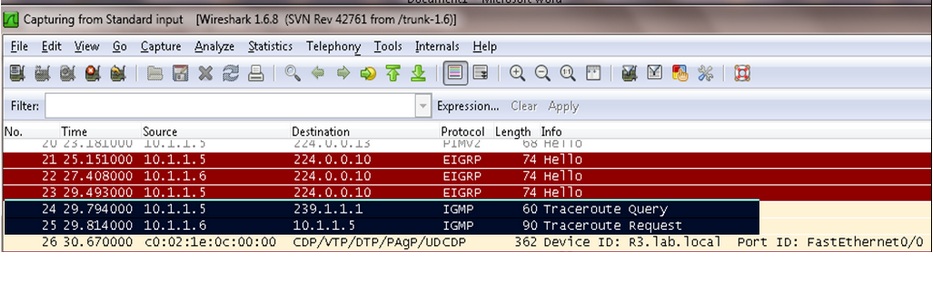

On R3’s interface fa0/0, we capture the traceroute query and request, marked with a black box in the figure below:

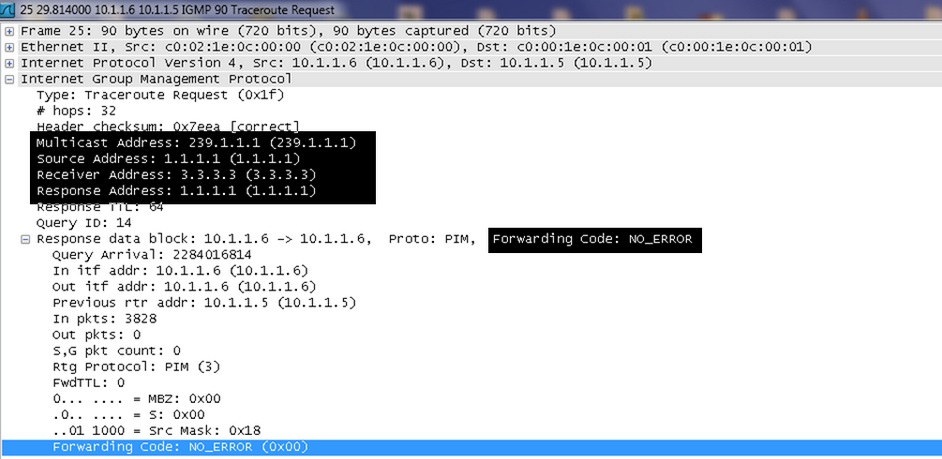

Let's open the traceroute request packet for a more detailed view.

As shown in the figure above, the “FORWARDING CODE: NO_ERROR” field shows that after the router received the multicast packet it performed an RPF check; because the RPF check succeeded, the packet was forwarded.

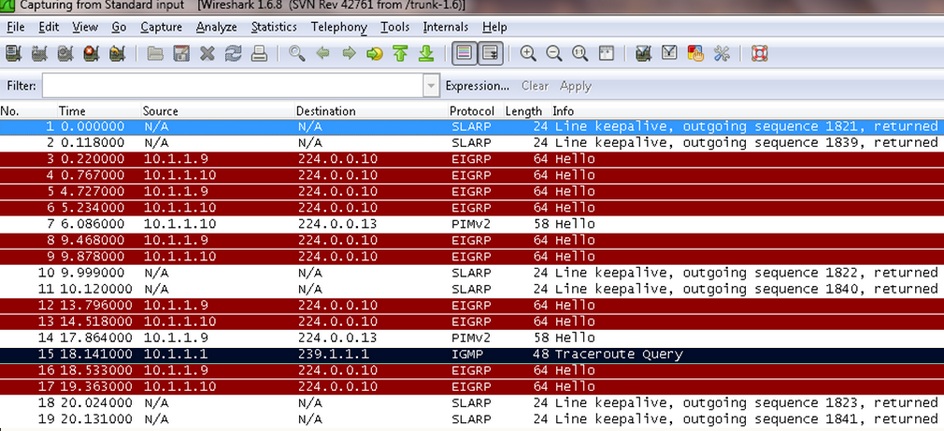

Now let’s view the capture taken on interface S0/0:

It shows only the traceroute query, not the request, because the packets are dropped due to RPF check failure.

In conclusion, the RPF check is a strategy by which a router accepts packets that arrive over the shortest path back to the source and discards those that arrive over longer routes, thereby avoiding routing loops and duplication.